OpenAI launches Codex: A 'Software Engineer' agent that runs parallel tasks

Just the other day I wrote a long post about Anthropic's Claude Code (triggered by a Jeff Dean Q&A), and I noted that OpenAI's equivalent, the Codex CLI, was pretty limited in comparison (so far, anyway!).

With the noise building about what Google might bring to market soon, OpenAI just announced their latest release in the form of Codex.

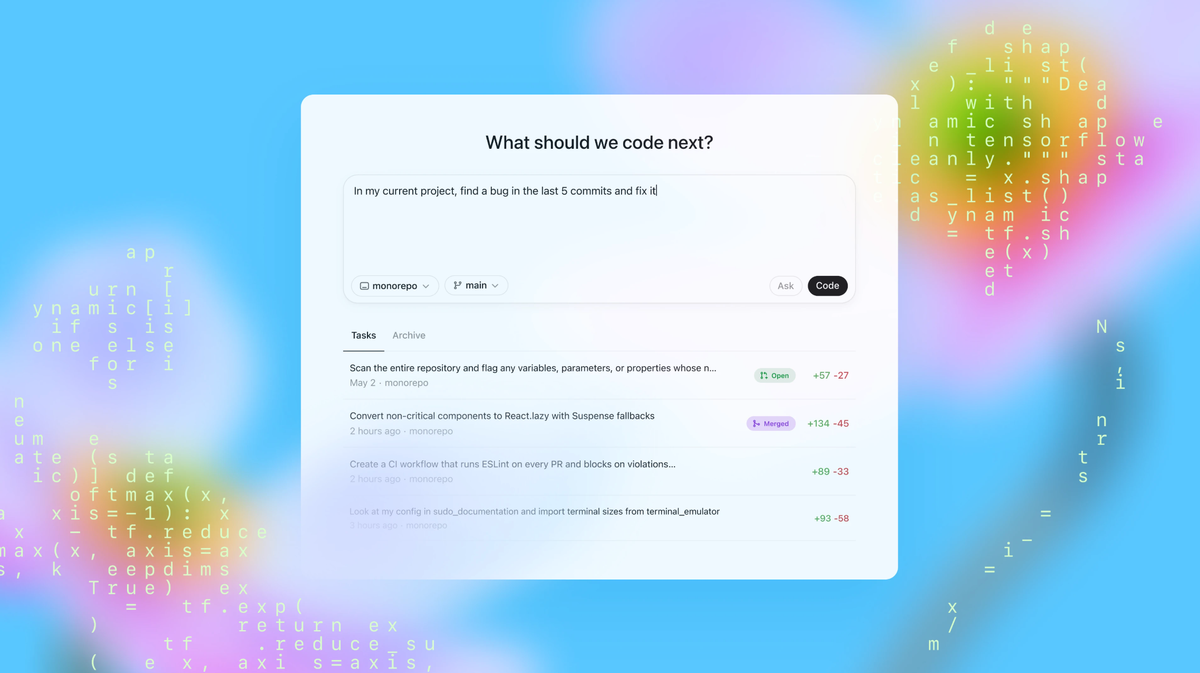

I was hoping they might ship a rather exciting update to the Codex CLI to create even more competition for the Anthropic team. But no. The OpenAI team has taken a slightly different approach, launching a "cloud-based software engineering agent that can work on many tasks in parallel, powered by codex-1. Available to ChatGPT Pro, Team, and Enterprise users today, and Plus users soon."

I really like the lateral thinking.

Instead of a system sitting on your desktop or server, they're approaching things another way. The Codex 'agent' will access your code from (for example) your GitHub repository and can then discuss it, explain it, modify it and add new code to it.

The idea that it could do multiple tasks in parallel is extremely exciting, and that, I would imagine, is the basic thinking behind having Codex work in a cloud environment. Once it knows what to do, it can spin up many instances to rapidly complete tasks at the same time.

Right now, my Claude Code buddies and I (I have 5 open at the moment) are all working sequentially, on one thing at a time. Parallel would be awesome. Indeed, one of the reasons I have multiple Claude Code instances running is that each one works sequentially.

Count me in as highly intrigued.

I will endeavour to check it out as soon as I can.

In the meantime, here is the live stream replay. It's about 25 minutes if you'd like to watch the intro from the team: