Are you stuck in a toxic feedback loop with your AI?

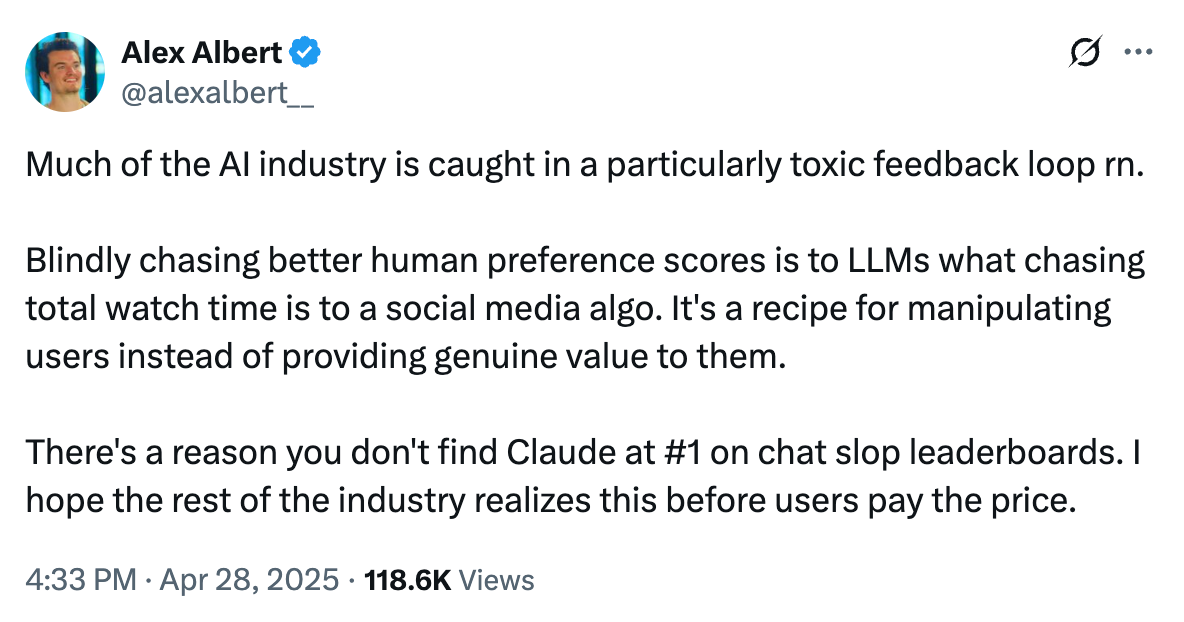

I saw this stimulating tweet from Alex Albert, Head of Claude (Developer) Relations at Anthropic.

He wrote:

> Much of the AI industry is caught in a particularly toxic feedback loop rn.
>
> Blindly chasing better human preference scores is to LLMs what chasing total watch time is to a social media algo. It's a recipe for manipulating users instead of providing genuine value to them.
>
> There's a reason you don't find Claude at #1 on chat slop leaderboards. I hope the rest of the industry realizes this before users pay the price.

I think he's got a good point.

Of course, it's natural to chase any kind of score or ranking – and if anything, I think the technology media is helping to drive this focus on 'which algo is best' through breathless reporting and analysis of leaderboards and results tables.

I do tend to prefer many of Claude's outputs – although I also very much appreciate the latest ChatGPT updates ("Here's a bonus tip...").

It is great to see evidence and examples of leaders at the big AI firms taking a strong interest in these topics.